Is Web3's racial reckoning here?

Crypto, NFT, metaverse scandals press public media to think about new tech

While cryptocurrency has lost much of its luster, NFTs, the metaverse and the other elements commonly associated with Web3 remain fixtures of the mainstream imagination. These alternative industries, formats and spaces have been forecast as the next version of the internet, one that will fuse in-person and virtual life. Yet, as with anything people create, bias can be built in.

Anchored in free-market, free-speech absolutism, the overwhelmingly white decentralized tech movement has presented any number of challenges. More than a few issues are documented at spots like Web3 is going just great, where grift and malfeasance are meticulously laid bare. Elsewhere, the Bored Ape Yacht Club controversy and cynical subprime mortgage-style ploys have garnered rightful attention.

Despite Web3's problematic equity politics and scammy nature, public media has been circling the space for a while. Consider The Drop’s NFT venture and WFAE’s crypto wanderings. So who better to explain how Web3 intersects with equity and inclusion than an expert in the space?

Mutale Nkonde is an Emmy-winning producer and the founder and leader of AI for the People, a firm that uses art, film and culture to help people imagine a world in which AI technology does not track, misinform or harm Black communities.

She started AI for the People in 2019 after co-authoring a report, Advancing Racial Literacy in Tech, which called on the tech sector to pay closer attention to the impact predictive algorithms have on Black communities. That same year, Nkonde worked as an AI policy advisor and led a team that introduced the Algorithmic Accountability Act, the DEEP FAKES Accountability Act, and the No Biometric Barriers to Housing Act (reintroduced in 2021) to the U.S. House of Representatives.

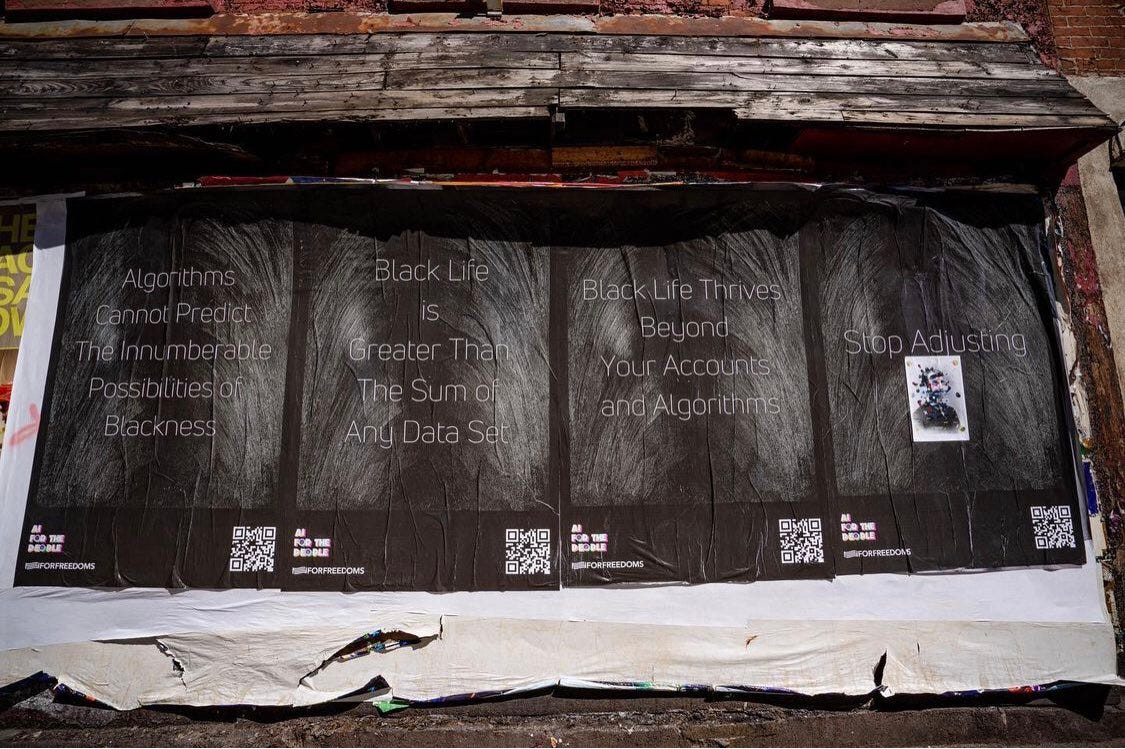

The failure of these bills to move out of committee convinced her of the need to increase policymakers’ understanding of the racial justice implications of tech. She then returned to her work as a television producer to create media content that outlined these issues. Here’s one of the art campaigns:

Nkonde is a member of the TikTok Content Moderation Board and a fellow at Stanford University's Digital Civil Society Lab, and she has previously held fellowships at the Berkman Klein Center for Internet & Society at Harvard and the Institute for Advanced Study at Notre Dame. She is currently a master's candidate at Columbia University in New York, where her research focuses on race and technology.

She started her career as a journalist. Between 2000 and 2008, she produced films about science and technology for the BBC, CNN and ABC. In 2008, she volunteered for Barack Obama's first presidential campaign, where she met people who later asked her to work with Google's External Affairs team as it invested in a number of nonprofits offering coding education to Black children in New York City. That work is where her interest in race and tech was born.

Let’s talk about equity and the emerging virtual world. 👇🏿👇🏿👇🏿

What got you involved in this movement?

I wanted to be a journalist. I specifically wanted to be a filmmaker and, for the first 10 years of my career, that's what I was doing. And I was doing it really well. As I came to the end of those first 10 years, something called Twitter was invented. I didn't know what it was. I didn't know where it was going, but I was really fascinated by it, and I got the opportunity to volunteer on then-Senator Barack Obama's 2008 presidential campaign. And I was thinking, ‘You know, his name is Barack Hussein Obama. He's Black. He's not going to win. I don't know what Twitter is, but I'm going to go and volunteer in a communications capacity.’ As a journalist, you have to be impartial, so that was that. You can't really go back into newsrooms very easily once you've done that.

Coming out of that experience, I had met all of these people. A couple of years later, I was asked if I wanted to come to what was called a hackathon. I had no idea what hackathons were. This was in New York City, where I'm based, at a time when we were calling ourselves Silicon Alley.

It was through these kinds of volunteer hackathons that I started to learn about all of these different companies, including one called Google. That led to my being asked if I was interested in working with their external affairs team to bring in nonprofits. This was around 2011 or 2012.

What equity in tech meant then was teaching Black kids and Hispanic kids how to code. What was really compelling about that for me was the idea that there was a skill that was going to become important in the future, and that to be economically viable, you'd have to learn it. And the great companies that we love so much were doing that work.

But, in reality, coding is not the gateway to riches. I remember telling young Black kids in New York City that they too could be Mark Zuckerberg. Thinking about why I said that is kind of embarrassing now, but at that time that was my vision. It was an immigrant vision of success and upward mobility. And it was great. I mean, anybody who has worked in, around or for Google knows that between the scooters, the free food, the amazing people they bring in and the social cachet of being involved, you feel on top of the world. You feel like you're special, more special than all the other Black people in the world. And I loved it until around 2015.

On Twitter, a Black engineer tweeted that when he put the search term ‘black teenager’ into Google search, he got mugshots. But when he put ‘white teenager’ into the search engine, white teenagers came up: white teenagers who were just carefree in parks, having great fun.

It was very clear to me, and to anybody who understood product design, that the products we design are conceived, developed and coded by human beings. What that meant was that for mugshots to come up, there had been a human being at the other end who had decided that Black childhood correlated with Black criminality.

And once the pairing of the words ‘black teenager’ with pictures of mugshots had been established, it had gone through various channels. It wasn't just that person. It had gone through managers. It had gone through supervisors, and ultimately it had been deemed fit to release into the marketplace.

To be fair to Google, this isn't about Google having a bad search engine. These types of racist pairings can be found throughout the tech sector and throughout its products. And I was like, ‘Oh my God, I have to do something about this.’ Then Weapons of Math Destruction was released in 2016 by Cathy O'Neil. She is a data scientist trained at Harvard who laid out 16 case studies of how algorithmic systems like Google search discriminated against anybody who applies for jobs online, and also discriminated in the public education system. She looked at how algorithms discriminated in the criminal legal system, where judges were now using similar types of machinery to decide who got bail and who didn’t.

Then Virginia Eubanks’ book, Automating Inequality, looked at how the public systems that give us unemployment relief or housing relief discriminate against poor people. Then Safiya Noble, who received a MacArthur fellowship this past year, released her book Algorithms of Oppression, which looked, again, at Google search. It was just becoming clear that the technologies we love, the technologies we use, are also used in ways that discriminate against people of color and push these historical discriminations into the future, because these are technologies we're using all the time.

As a point of intervention, I thought in 2019 that I would start some type of organization that would deal with policy and do advocacy work. I'd been a fellow at Data & Society, a research institution here in New York, and had worked on what was to become the Algorithmic Accountability Act, as well as a report called Advancing Racial Literacy in Tech. That report was really a call to the industry: if we do not understand how our technologies intersect with our histories of race, racism and oppression, we’re only going to continue to create technologies that reinforce those types of discrimination.

Our first lobby day was going to be in April 2020, and then the world locked down.

This was the culmination of a decade of work. We had to pivot, and we pivoted back to what I knew, which was communication. We decided that the only way we could advocate was at the level of the heart, not the head. We co-produced a short news item with an outlet called NTV here in New York. It told the story of how nuns were buying shares of Microsoft and using their power as shareholders to protest the development of facial recognition technology. In 2020, following the death of George Floyd, Microsoft even said it was putting a moratorium on selling those systems to police. We have another film, called Blackness Unbound, that we submitted to the Smithsonian, which looks at how social media is reshaping what we think of as citizens and individuals.

We’re really speaking to communities of color to tell those stories and help audiences understand that, whether you're using self-checkout, taking an exam with online proctoring as many students did when schools went online during COVID-19, or receiving pain medication in a hospital, you're now interacting with these kinds of silent systems that are making decisions about your life.

When we speak to journalists, we encourage them not to tell the story of the technology and the data, but to tell the story of the people and the impact of that technology, because that's the only way we're going to get what we think of as AI for the people. And that's really the only way we're going to expand how we see humanity.

Much of the conversation around technology within public media is around the trends you've pointed out. How should organizations like public radio or public television consider exploring these digital areas?

I would advise the public media sector to think about its mission. If your mission is to serve the needs of the American people, it would be naïve and irresponsible to simply present the metaverse as something new. The problem is that, the way the metaverse is developing now, many of us do not have access to the headsets that would let us in.

A woman wrote a Medium post about how, when she entered the metaverse, her avatar, which was of a woman, was sexually assaulted, while voices coming from other avatars said that she should enjoy being raped. What that really pointed out was that the metaverse is a space into which we bring the best and the worst of ourselves. And just as women are vulnerable in reality, we're also vulnerable in virtual reality.

In February of this year, following that disclosure, Meta put controls in place that let you limit how close other people come to you. If you're threatened, you can easily get away from that situation. But they didn't think about that on the front end.

And I think the role of public television is to think about that on the front end. What would you not allow on your air? Shouldn't those things also be disallowed in immersive social spaces? We don't have the legislation, but we have the media makers, and I think public radio and public television can start that conversation early. From a psychological perspective, we don't even know what the implications of assault in a headset are. These are new questions, and public media should be asking them.

What do you think are some of the biggest misconceptions about the new technologies?

Many leaders don't understand that the technologies we have are developed and owned by private companies. Therefore, the primary stakeholder is the shareholder. That's what happens when your company is publicly traded.

So people say things like, ‘If I don't know something, I'll go to Google.’ As a technologist, I think, ‘Well, Google is an advertising platform that uses your search terms to sell you more things.’ It's not an information provider. If you want new information, you should be going to a library, because librarians have taken on the charge of serving the public good. They're not going to give you the information that is the most viable. Nor are they going to give you information alongside an ad for a bail bondsman, which is what happened to a professor at Harvard Kennedy School, who's also African American, when she put her name into Google search.

Many leaders need to start asking: who do these technologies serve? Why is it that we're using facial recognition primarily for crime? Why aren't we using that same recognition technology after brush fires in California to figure out what survived and what didn't? We could use that same underlying recognition technology to go in when the ground is too hot for humans. But that would be a public service, and it may not get you to profit, whereas selling facial recognition to police forces who will buy it at any price will earn you a profit.

The last piece of advice I'll give leaders: assuming that technology is neutral is irresponsible.

Many of the technologies we have were conceived of through science fiction. Take the Motorola phone, the first cell phone ever marketed: the lead engineer used to watch Star Trek, loved the communications devices and said, ‘I'm going to create one of those.’ These are figments of people's imagination, but I feel like many leaders just think there are these geniuses at these companies thinking about what we need. And it's like, ‘No, these are dreamers who are allowed to become doers because they have a particular type of education and access to the technologies.’ And these privileged white men may not even conceive of gay people, non-binary people, disabled people, Black people or non-native English speakers, because those people are not reflected in the labs where these technologies are developed.

Algorithms are not faceless technologies that simply enhance our lives, and sometimes we don't acknowledge that they are the products of individuals with their own biases, their own blind spots and their own misconceptions of the world.

That's exactly right. What product managers do is think of a persona, meaning who's going to use a product, and then design for that persona. In the industry, the persona is always a woman from the Midwest. And that's code for a white woman, whereas in a city like Cleveland, Ohio, 50 percent of the residents are Black. In a city like Detroit, 78 percent of the residents are Black. Even when we think about who we're designing for, we're never designing for poor Black people or poor Hispanic people. We're always designing for a particular race and a particular class, to answer their questions.

And dare I say, speaking specifically as an immigrant to this country, one who was just naturalized in 2020, the terms of technology may be very different if I'm an immigrant, particularly if I'm an immigrant who's undocumented. Do I really want a technology that recognizes me while I'm under threat from ICE? That's a very different question than it is for a white woman from the Midwest.

There's an amazing book called Design Justice by the scholar Sasha Costanza-Chock that comes from a trans perspective. The example it uses is going through airport security, where the body scanner has to label you as male or female. The central argument is that these personas don't recognize people. They recognize ideas of people, specifically the ideas of the white men who dominate the field of design thinking.

The technology is still being developed, and we can still impact the conversation. We just have to be bold and brave. 🟢

La próxima ⌛

The next summer-schedule OIGO is in your inbox August 5. We will explore ways organizations are creating belonging locally. Belonging begets trust and then engagement. We’ll unpack examples next time.

I’ll be on a pair of NABJ/NAHJ convention panels August 4. This includes a breakfast session on diversity and public media leadership. At 11 a.m., I’ll be on a panel on diverse audio. I also expect to facilitate an AIR meetup. Say hey if you’ll be there.

-- Ernesto

Cafecito: stories to discuss ☕

¿Quién Are We?, Colorado Public Radio’s new podcast on the Hispanic experience in the state, is out now. Here’s the sketch.

Barry Malone, deputy editor-in-chief for the Thomson Reuters Foundation, taps into something we covered last OIGO: the white editorial firewall. “The majority of journalists… are privately educated and come from privileged backgrounds. Too many are white. Too many are men,” he says. “But what bothers me is that there are so many journalists that are in denial about this.”

This story broke after the last OIGO, but it's still worth noting: more than a dozen Spanish-language stations, some in huge markets, have been sold. Huge ambitions have been put forward, as have commitments to retaining old programming, so there's no telling what the outcome will be.

El radar: try this 📡

Offer Spanish, without translation. WNIJ’s Perspectivas is a commentary segment by and for Spanish speakers in Illinois, with no translations. The story of how it came together is at America Amplified.

Get to know your community. Documented, a nonprofit journalism organization in New York City, is doing a news needs survey of Caribbean immigrants. Here’s what’s being asked.

Talk about area street vendors. Media in Fresno and Los Angeles are covering the latest assaults on and killings of street vendors, the people in many communities who sell food from colorful carts and bicycles. The very nature of the business makes vendors vulnerable to attacks.

Employ listening before reporting. WHYY sent reporters into communities for two months to listen, without reporting, to what people had to say about gun violence. The result? New trust and relationships.

Ask why audiences are disinvested. The Dallas Free Press did that, and found curious results on news consumption. Almost half the respondents were Latino/a/e/x.

Thanks for reading.
